Problem

When you have a lot of data need to be inserted into the database, or so called data import, table locking is inevitable due to the database is ensuring data integrity with the read committed isolation level. When one transaction is required to perform a lot of data insertion or update, it is going to lock the table and therefore could cause more wait time for any other transaction which are currently queuing up for table access. It is recommended to split the huge data insertion or update into multiple transaction which can be completed quickly by writing the query carefully.

Today topic is about making use of table partition switching to reduce number of table lock and you still be able to insert huge amount of records. The idea is coming from Serena Yeoh, I am here to share the concept and how it was implemented.

Concept

Imagine that you need to insert huge amount of records into a table. You worry that your action will cause table locking and others cannot access the table, eventually they get query timeout error or deadlock error from their application. The concept is to have a staging (temporary) table which is created base on the main table which you are going to insert huge amount of records into it. You can just insert all the records into the staging table first temporarily without affecting others who are using the main table. When your data is ready in the staging table, at the main table, split the last partition into a new partition with the size same as the staging table size, then switch the staging table to the main table newly split partition, then merge the split partition back with the last partition.

Challenges

The are a few challenges to implement the above concept. One of them is the identity that you are going to insert into the staging table may already exists in the main table, since it could be used by a different session which is doing record insertion. The identity seed of the main table is incrementing from time to time. Therefore, the main table primary key constraint check will fail and it prevent you from switching the staging table in to the split partition.

Another challenge is when a session is inserting multiple records, at the same time, you have split the last partition to a new partition. The other session which is inserting records, the records will be leak into the new partition. When you have data in the new partition, you cannot switch the staging table to the new partition.

When you have above challenges solved, you will face another problem with partition fragmentation. Imagine that there are multiple session are doing the same table partition splitting and merging, if one fail, it will leave the split partition there with empty data. Although you can protect it with transaction, the split partition can be rollback, but the table identity cannot be rollback because there are other sessions still doing the record insertion, and table identity keep incrementing, rolling back the identity could cause key duplication error later. Anyway, it is very rare that staging table cannot be switch in, unless it contain dirty data. However, it is still manageable because we can fix the data, switch and merge the partition manually later.

The following are the implementation of the concept and the solution for the mentioned challenges:

Another challenge is when a session is inserting multiple records, at the same time, you have split the last partition to a new partition. The other session which is inserting records, the records will be leak into the new partition. When you have data in the new partition, you cannot switch the staging table to the new partition.

When you have above challenges solved, you will face another problem with partition fragmentation. Imagine that there are multiple session are doing the same table partition splitting and merging, if one fail, it will leave the split partition there with empty data. Although you can protect it with transaction, the split partition can be rollback, but the table identity cannot be rollback because there are other sessions still doing the record insertion, and table identity keep incrementing, rolling back the identity could cause key duplication error later. Anyway, it is very rare that staging table cannot be switch in, unless it contain dirty data. However, it is still manageable because we can fix the data, switch and merge the partition manually later.

The following are the implementation of the concept and the solution for the mentioned challenges:

Implementation

CREATE PARTITION FUNCTION [ContactListPartitionFunction] (BIGINT)

AS RANGE LEFT

FOR VALUES (0);

CREATE PARTITION SCHEME [ContactListPartitionScheme]

AS PARTITION [ContactListPartitionFunction]

ALL TO ([PRIMARY]);

Create a partitioned table.

CREATE TABLE [dbo].[ContactList] (

[ContactId] BIGINT IDENTITY (1, 1) NOT NULL,

[FullName] VARCHAR (100) NOT NULL,

[ContactNumber] VARCHAR (50) NOT NULL,

[Address] VARCHAR (500) NOT NULL,

[CreationDate] DATETIME NOT NULL,

CONSTRAINT [PK_ContactList] PRIMARY KEY CLUSTERED ([ContactId] ASC) ON [ContactListPartitionScheme] ([ContactId])

);

Create partitioned indexes for my table.

CREATE NONCLUSTERED INDEX [IX_CreationDate_Sort]

ON [dbo].[ContactList]([CreationDate] ASC)

ON [ContactListPartitionScheme] ([ContactId]);

CREATE NONCLUSTERED INDEX [IX_Contact_Search]

ON [dbo].[ContactList]([FullName] ASC, [ContactNumber] ASC)

INCLUDE([Address])

ON [ContactListPartitionScheme] ([ContactId]);

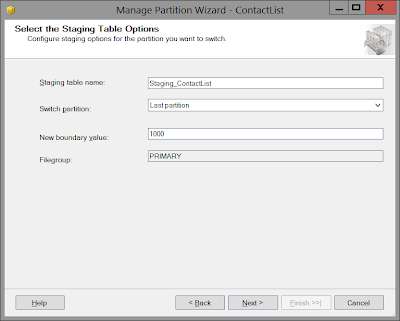

Next, we prepare a temp table (staging table) for the main table. You can do it by using SQL Server Management Studio or writing T-SQL scripts manually.

Right click the main table, then look for Storage menu, click the Manage Partition menu.

Select "Create a staging table for partition switching".

Enter the staging table name, this is the temp table which you are going to insert record into it. Set any new boundary value, this is the value that we need to change it dynamically later in programming way by depending on the number of record in this table.

Run the script immediately.

Now, delete the constraint of the temp table, because we are going to add it later.

Then, proceed to insert multiple records into the temp table. Take note that the identity of the record must be in numeric data type. The reason is because we need to make use of the identity to solve the potential duplicate identity constraint check challenge while switching table partition. The inserted first row of record must always start with identity value 1 and is incremental for the rest of the rows.

Once the data in the temp table is created properly, we can now proceed to split the partition.

The following are the information that we need before performing the data transfer:

- Temp table row count

- Main table last identity

- Partition number

- Previous partition boundary (left)

- Split partition boundary (right)

The following script is the step by step execution for the concept. See the comment for more detail.

DECLARE @rowCount BIGINT

DECLARE @currentIdentity BIGINT

DECLARE @newIdentity BIGINT

DECLARE @partitionNumber INT

DECLARE @leftBoundary BIGINT

DECLARE @rightBoundary BIGINT

DECLARE @sql NVARCHAR(1000)

-- Use transaction to lock the table

for table switching

-- Try to minimize code and reduce as

much wait time as possible

BEGIN TRY

BEGIN TRANSACTION

-- Step 1 : Get the row count of the

temp table

SELECT @rowCount = COUNT(0) FROM [dbo].[Staging_ContactList]

-- Step 2 : Get the last identity of

the main table

SET @currentIdentity = CONVERT(BIGINT, IDENT_CURRENT('[dbo].[ContactList]'))

-- Step 3 : Get the boundary base on

number of record to be inserted

-- This identity = 1 check is required when the table is empty, left boundary must be 0

IF @currentIdentity = 1

SET @leftBoundary = @currentIdentity - 1

ELSE

SET @leftBoundary = @currentIdentity

SET @rightBoundary = @leftBoundary + @rowCount

-- Step 4 : Reseed the table with the

new identity for other session to insert new record with latest identity and

avoid duplicate key constraint failure while table switching

-- Also to reserve identity for the temp table

DBCC CHECKIDENT('ContactList', RESEED, @rightBoundary)

PRINT 'Row Count : ' + CONVERT(VARCHAR, @rowCount)

PRINT 'Current Identity : ' + CONVERT(VARCHAR, @currentIdentity)

PRINT 'Left Boundary : ' + CONVERT(VARCHAR, @leftBoundary)

PRINT 'Right Boundary : ' + CONVERT(VARCHAR, @rightBoundary)

-- Step 5 : Update temp table identity

base on the main table current identity to solve the identity constraint check

challenge

-- When you have the identity value in proper order start from 1, 2, 3, 4... in your temp table

-- And, the last identity before the partition to be switched is 8592

-- Update all the identity in the temp table to 8592+1, 8592+2, 8592+3, 8592+4...

-- End result you will not have duplicate key error occur when switching table

UPDATE [dbo].[Staging_ContactList]

SET ContactId = ContactId + @leftBoundary

-- Step 6 : Get the partition number

base on the new boundary value

SELECT @partitionNumber = $PARTITION.ContactListPartitionFunction(@rightBoundary)

PRINT 'Partition Number : ' + CONVERT(VARCHAR, @partitionNumber)

-- Step 7 : Add check constraint to

temp table to fulfill the criteria for table partition switching

SET @sql = '

ALTER TABLE [dbo].[Staging_ContactList]

WITH CHECK ADD CONSTRAINT [chk_Staging_ContactList_partition_' +

CONVERT(VARCHAR, @partitionNumber) + '] CHECK

([contactID]>N''' + CONVERT(VARCHAR, @leftBoundary) + ''' AND

[contactID]<=N''' + CONVERT(VARCHAR, @rightBoundary) + ''')

ALTER TABLE [dbo].[Staging_ContactList]

CHECK CONSTRAINT [chk_Staging_ContactList_partition_' +

CONVERT(VARCHAR, @partitionNumber) + ']

'

PRINT @sql

EXEC sp_executesql @sql

-- Step 8 : Switch the partition

ALTER TABLE [dbo].[Staging_ContactList]

SWITCH TO [dbo].[ContactList]

PARTITION @partitionNumber;

-- Step 9 : Merge previous partition

with the partition of previous partition

ALTER PARTITION FUNCTION ContactListPartitionFunction ()

MERGE RANGE (@rightBoundary);

ALTER PARTITION FUNCTION ContactListPartitionFunction ()

MERGE RANGE (@leftBoundary);

-- Optional 1 : Drop the temp table

--DROP TABLE

[dbo].[Staging_ContactList]

-- Optional 2 : Truncate table then

drop the check constraint

SET @sql = '

ALTER TABLE [dbo].[Staging_ContactList]

DROP CONSTRAINT [chk_Staging_ContactList_partition_' +

CONVERT(VARCHAR, @partitionNumber) + ']

'

PRINT @sql

EXEC sp_executesql @sql

COMMIT TRANSACTION

END TRY

BEGIN CATCH

SELECT

ERROR_NUMBER() AS ErrorNumber

,ERROR_SEVERITY() AS ErrorSeverity

,ERROR_STATE() AS ErrorState

,ERROR_PROCEDURE() AS ErrorProcedure

,ERROR_LINE() AS ErrorLine

,ERROR_MESSAGE() AS ErrorMessage;

ROLLBACK TRANSACTION

END CATCH

Testing

When you are playing around with the script above, it is useful to query the partition from time to time to visualize what is happening with the table partition by executing the following query:

SELECT p.partition_number AS [Partition No] , g.name AS [FileGroup], p.[rows] AS Rows, r.[value] AS [Boundary] FROM sys.partitions p

INNER JOIN sys.indexes i ON p.object_id = i.object_id AND p.index_id = i.index_id

INNER JOIN sys.partition_schemes s ON s.data_space_id = i.data_space_id

INNER JOIN sys.destination_data_spaces d

ON d.partition_scheme_id = s.data_space_id AND d.destination_id = p.partition_number

INNER JOIN sys.filegroups g ON g.data_space_id = d.data_space_id

LEFT JOIN sys.partition_range_values r

ON r.function_id = s.function_id AND r.boundary_id = p.partition_number

WHERE p.[object_id] = OBJECT_ID('ContactList') AND i.index_id = 1

Performance

The table partition split is going to take some time to complete the process, and during the time it will cause table locking. When the partition size is big, it is going to take even lot more time to split the partition. It is advisable to have multiple small - medium size partitions in one table instead of just one big partition. It is always faster to split a smaller partition.

Security

This concept require you to create and alter table and deal with partitions. If you wonder what security rights is required if you use this implementation, the answer is db_ddladmin or db_owner.If you like this concept or think this is a crazy idea, feel free to drop me a comment. Thanks.